Making A Peer Review System for My Blogs Using Google-ADK & Mem0

I needed an automated way to peer review my blogs, so I used Google-ADK and Mem0 to build an end-to-end system.

My Process

When writing my technical blogs, I have a very rigid process I like to follow.

- Research the topic I am interested in

- Create a structured research roadmap covering what I need to learn about the topic

- Go through the roadmap and try to learn/research the concepts as in-depth as I can

- Start coding whatever the relevant implementation for that topic is

- Finally, start writing the blog

But one question always bugs me: "Is my blog factually correct, and have I compromised its integrity anywhere?"

That leads me to frantically go through my sources again and again and to ask tools like Perplexity about the blog. So I had the idea to automate this process by creating a Peer Review System.

What This System Does

1. What the System Focuses On

- It behaves like a technical editor, not just a grammar checker.

- It evaluates writing for:

  - Structure

  - Clarity

  - Factual accuracy

  - Tone correctness

  - Proper use of supporting evidence

- The purpose is to help the writer produce content that is accurate, readable, and consistent.

2. How It Reviews Content

- The system doesn’t read content blindly.

- It uses uploaded reference files as a knowledge base.

- Relevant information from those files is retrieved using semantic search rather than keyword matching.

- If a statement appears in the writing:

  - The system first checks if it exists in the uploaded sources.

  - If confirmed, the system becomes more confident in that claim.

  - If not found, it triggers an external web-based fact check.
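The lookup order above (uploaded sources first, web only on a miss) can be sketched in a few lines. This is a minimal illustration, not the project's actual code: `Verdict`, `verify_claim`, and the two lookup callables are hypothetical names, and the real system would back them with semantic retrieval and a search agent.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Verdict:
    claim: str
    status: str              # "supported_by_sources", "supported_by_web", or "unverified"
    evidence: Optional[str]  # where the support was found, if anywhere


def verify_claim(
    claim: str,
    search_sources: Callable[[str], Optional[str]],  # hypothetical: lookup in uploaded files
    search_web: Callable[[str], Optional[str]],      # hypothetical: external fact check
) -> Verdict:
    """Check uploaded sources first; fall back to the web only on a miss."""
    evidence = search_sources(claim)
    if evidence is not None:
        return Verdict(claim, "supported_by_sources", evidence)
    evidence = search_web(claim)
    if evidence is not None:
        return Verdict(claim, "supported_by_web", evidence)
    return Verdict(claim, "unverified", None)


# Stub lookups standing in for the real retrieval and search steps.
sources = {"ADK is an agent framework": "notes.md, section 2"}
found = verify_claim("ADK is an agent framework", sources.get, lambda c: None)
missing = verify_claim("Some unsupported claim", sources.get, lambda c: None)
```

Passing the lookups in as callables keeps the ordering logic separate from how each lookup is implemented, so either side can be swapped without touching the cascade.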

3. How Memory Improves Review Quality

- Feedback adapts over time instead of resetting with each review.

- The system tracks repeated mistakes or patterns such as:

  - Missing citations

  - Style inconsistencies

  - Formatting issues

- If the same issue shows up again, the system highlights it more firmly.

- This turns the review into a learning process rather than a one-time correction.
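One way to picture the escalation is a counter of issue types seen in earlier reviews, mapped onto a severity scale. The sketch below is an assumption about how this could work: the three-level scale and the `escalate` and `review_round` helpers are hypothetical, and a plain `Counter` stands in for the Mem0-backed history.

```python
from collections import Counter

SEVERITY_LEVELS = ["note", "warning", "critical"]  # hypothetical scale


def escalate(issue_type: str, history: Counter) -> str:
    """Pick a severity based on how many earlier reviews had this issue."""
    seen_before = history[issue_type]
    level = min(seen_before, len(SEVERITY_LEVELS) - 1)  # cap at the top level
    return SEVERITY_LEVELS[level]


def review_round(found_issues: list[str], history: Counter) -> dict[str, str]:
    """Assign a severity to each issue, then record the issues in the history."""
    report = {issue: escalate(issue, history) for issue in found_issues}
    history.update(set(found_issues))  # count each issue type once per review
    return report


history = Counter()
first = review_round(["missing_citation"], history)
second = review_round(["missing_citation", "formatting"], history)
third = review_round(["missing_citation"], history)
```

Here the same missing citation moves from a note to a warning to a critical flag across three reviews, while a first-time formatting issue starts at the lowest level.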

Screenshots:

- https://drive.google.com/file/d/1VTvQBkQ4753NbpVFlVPr5v6_kjH3SXjZ/view?usp=sharing

- https://drive.google.com/file/d/14sfHrC0Lw0pvU4oX7U18Ydv61a8RNTu0/view?usp=sharing

A Demo Peer Review Report:

Workflow

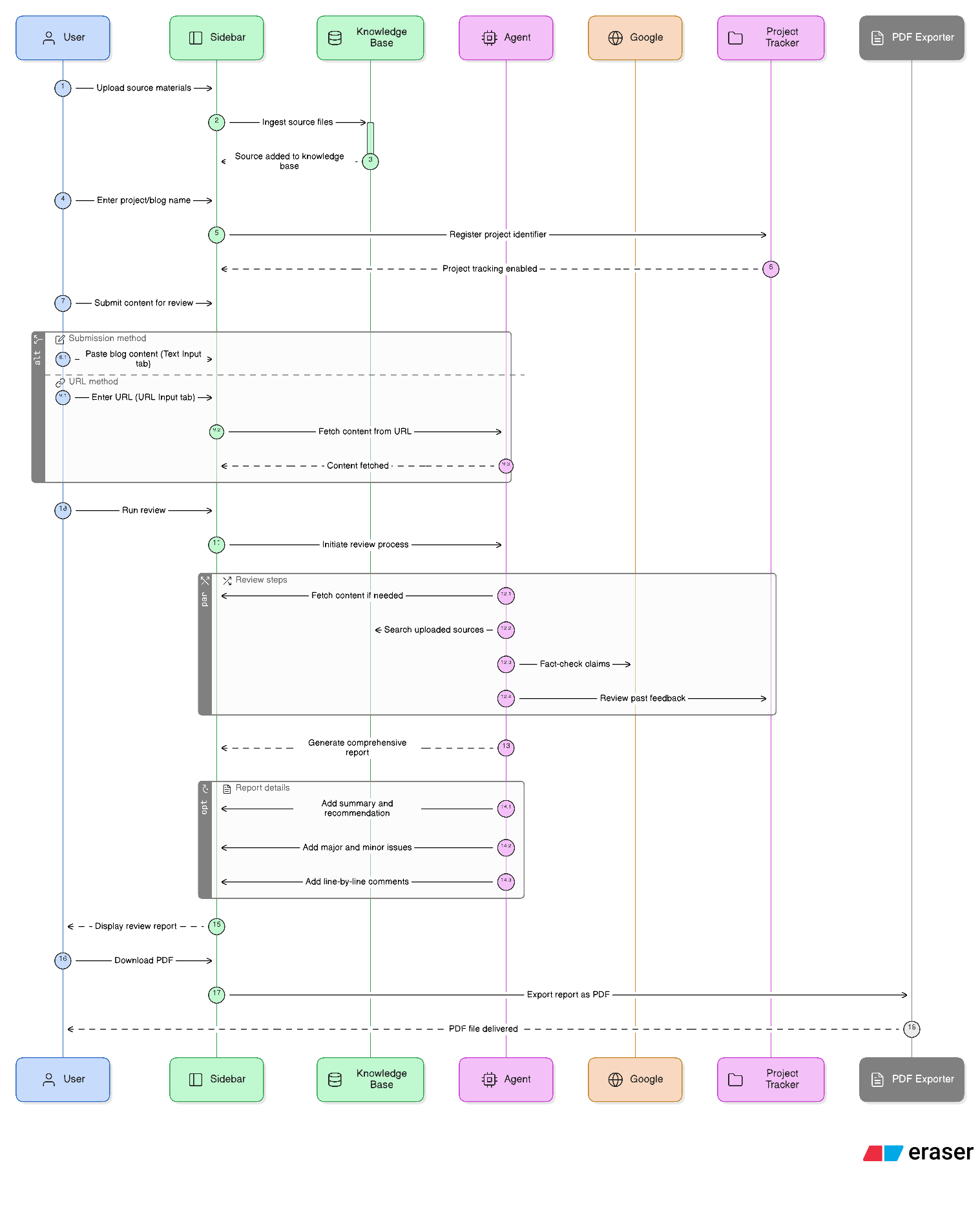

Phase 1: Ingestion

- Fetches content from URLs if needed

- Loads past review history for the project

- Examines uploaded source documents

Phase 2: Verification

- Identifies all factual claims in the content

- Searches uploaded sources for supporting evidence

- Uses Google search for external fact-checking

- Validates technical assertions and statistics

Phase 3: Evaluation

- Assesses clarity, flow, and structure

- Checks accuracy against evidence

- Evaluates tone for target audience

- Compares to past feedback to track improvement

- Flags recurring issues with escalated severity

Phase 4: Synthesis

- Generates a structured report

- Provides evidence for all major issues

- References past feedback when relevant

- Gives actionable, constructive feedback
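The four phases can be read as a pipeline that passes one shared state object from step to step. The sketch below only shows that shape. Every phase body is a deliberately naive placeholder (splitting on periods is not real claim extraction), and `ReviewState`, `PHASES`, and `run_review` are hypothetical names; in the actual system each phase is driven by agents and tools.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ReviewState:
    content: str
    evidence: dict[str, str] = field(default_factory=dict)  # claim -> supporting source
    claims: list[str] = field(default_factory=list)
    findings: list[str] = field(default_factory=list)
    report: str = ""


def ingest(state: ReviewState) -> ReviewState:
    # Placeholder: the real phase also fetches URLs and loads review history.
    state.content = state.content.strip()
    return state


def verify(state: ReviewState) -> ReviewState:
    # Placeholder: treat each sentence as a claim; a model would do this in practice.
    state.claims = [s.strip() for s in state.content.split(".") if s.strip()]
    return state


def evaluate(state: ReviewState) -> ReviewState:
    # Flag every claim that has no recorded evidence.
    state.findings = [f"No evidence found: {c}" for c in state.claims if c not in state.evidence]
    return state


def synthesize(state: ReviewState) -> ReviewState:
    # Turn the findings into a plain-text report.
    state.report = "\n".join(state.findings) or "No issues found."
    return state


PHASES: list[Callable[[ReviewState], ReviewState]] = [ingest, verify, evaluate, synthesize]


def run_review(content: str, evidence: dict[str, str]) -> ReviewState:
    state = ReviewState(content=content, evidence=evidence)
    for phase in PHASES:
        state = phase(state)
    return state


result = run_review(" A is true. B is false. ", {"A is true": "source.md"})
```

Keeping the phases as a list of functions with the same signature makes it easy to reorder them or drop one in for testing.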

Features

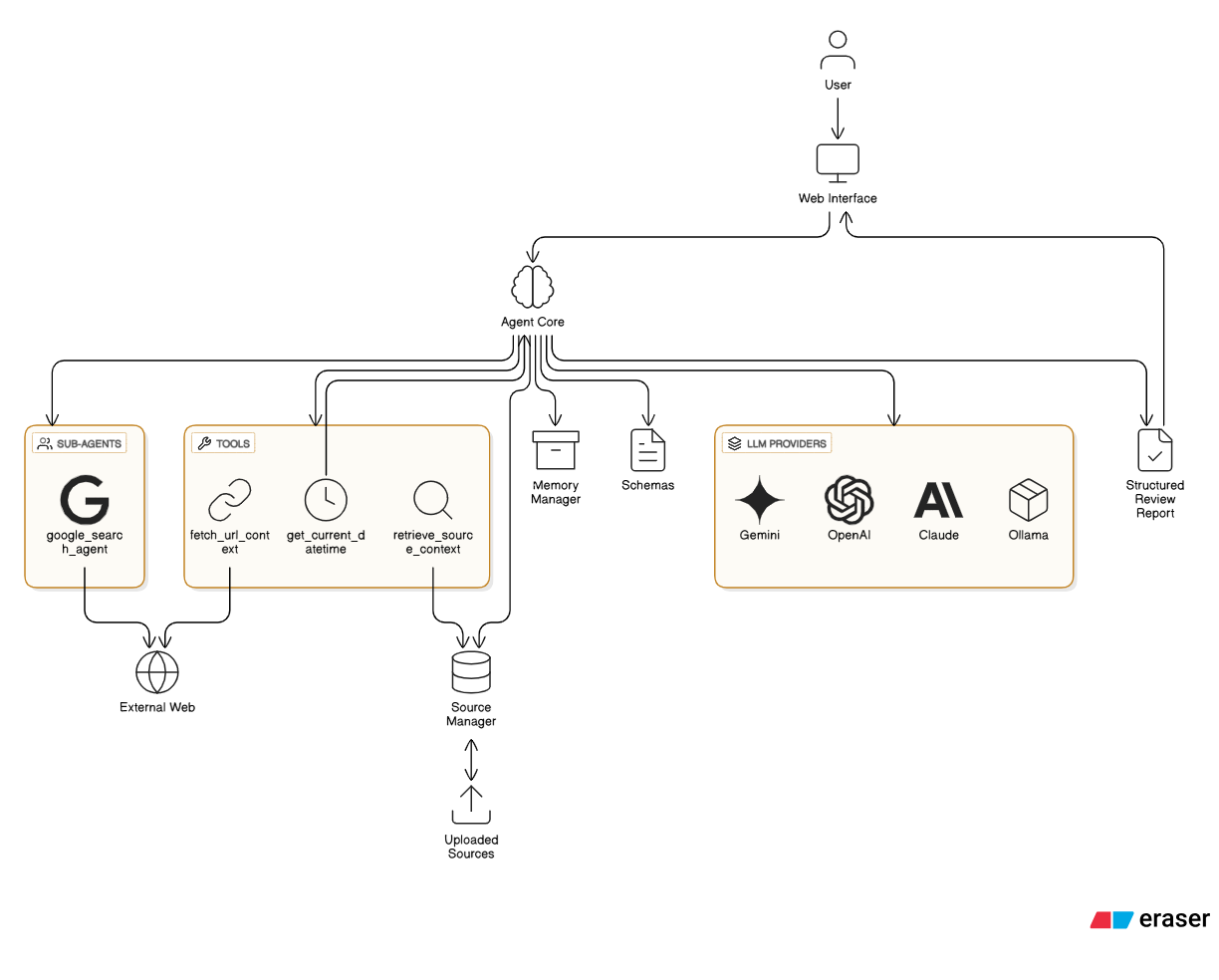

1. Model Flexibility

- You aren’t locked into one AI provider.

- Switching between models like Gemini, Claude, GPT, or Ollama only requires changing one environment variable.

- This gives control over:

  - Cost

  - Performance

  - Privacy

- The review logic remains consistent across models.
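As an illustration of the one-variable switch, the sketch below reads a `provider/model` string from the environment and validates it. The variable name `REVIEW_MODEL`, the default value, and the `resolve_model` helper are assumptions made for this example; the project's real variable name and model strings may differ.

```python
import os
from typing import Mapping, Optional

ENV_VAR = "REVIEW_MODEL"                   # hypothetical variable name
DEFAULT_MODEL = "gemini/gemini-2.0-flash"  # assumed default, for illustration only


def resolve_model(env: Optional[Mapping[str, str]] = None) -> tuple[str, str]:
    """Return (provider, model_name) parsed from a 'provider/model' string."""
    env = os.environ if env is None else env
    value = env.get(ENV_VAR, DEFAULT_MODEL).strip()
    provider, sep, name = value.partition("/")
    if not sep or not provider or not name:
        raise ValueError(f"{ENV_VAR} must look like 'provider/model', got {value!r}")
    return provider, name


# Switching providers means changing only the variable, not the review code.
local = resolve_model({"REVIEW_MODEL": "ollama/llama3"})
default = resolve_model({})
```

The resolved string would then be handed to whatever model wrapper the agent framework expects, so the review prompts and tools stay identical across providers.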

2. Context-Aware Retrieval

- Uploaded reference files are stored in a vector database.

- The system breaks them into chunks, embeds them, and indexes them for efficient search.

- During review, it retrieves relevant sections using semantic similarity rather than simple keyword matching.

- This helps the system understand meaning, not just match exact text.
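The chunk, embed, index, and retrieve steps can be sketched without any external services. Note the big simplification: the `embed` function here is a toy word-count vector, which only captures word overlap. A real setup would call a learned embedding model and store the vectors in a vector database, which is what makes the search semantic. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40) -> list[str]:
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(documents: list[str], size: int = 40) -> list[tuple[str, Counter]]:
    """Chunk every document and store each chunk next to its vector."""
    return [(c, embed(c)) for doc in documents for c in chunk(doc, size)]


def retrieve(query: str, index: list[tuple[str, Counter]], top_k: int = 3) -> list[str]:
    """Return the top_k chunks ranked by similarity to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:top_k]]


docs = [
    "Vector databases store embeddings for similarity search.",
    "Bananas are rich in potassium.",
]
index = build_index(docs)
best = retrieve("how do vector databases search", index, top_k=1)
```

Swapping `embed` for a real embedding call leaves the rest of the flow unchanged, which is the main point of the sketch.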

3. Automated Fact Verification

- When a claim isn’t supported by uploaded sources, the system escalates verification.

- A separate search agent performs a structured web lookup.

- The goal is not to rewrite content, but to confirm whether the information is reliable and accurate.

4. Built-In Memory

- The system remembers past reviews and writing patterns.

- If a mistake repeats, the system identifies it as a recurring issue.

- Instead of repeating the same note at the same level, the system makes its feedback stronger and more specific.

- This encourages long-term improvement rather than one-off corrections.

Limitations

- The verification is only as good as the model plus the search results.

- Source reliability isn’t enforced.

- Web search can surface low-quality or outdated material.

- The model is still the final judge. It can misinterpret sources, overtrust weak evidence, or fabricate a justification.